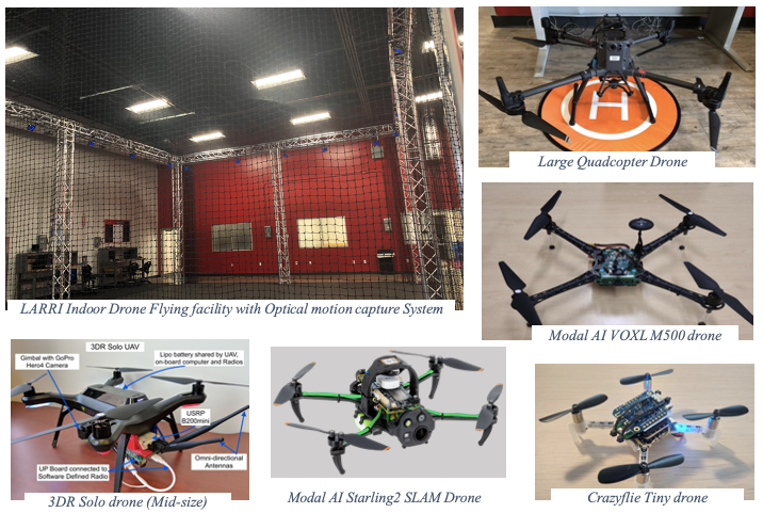

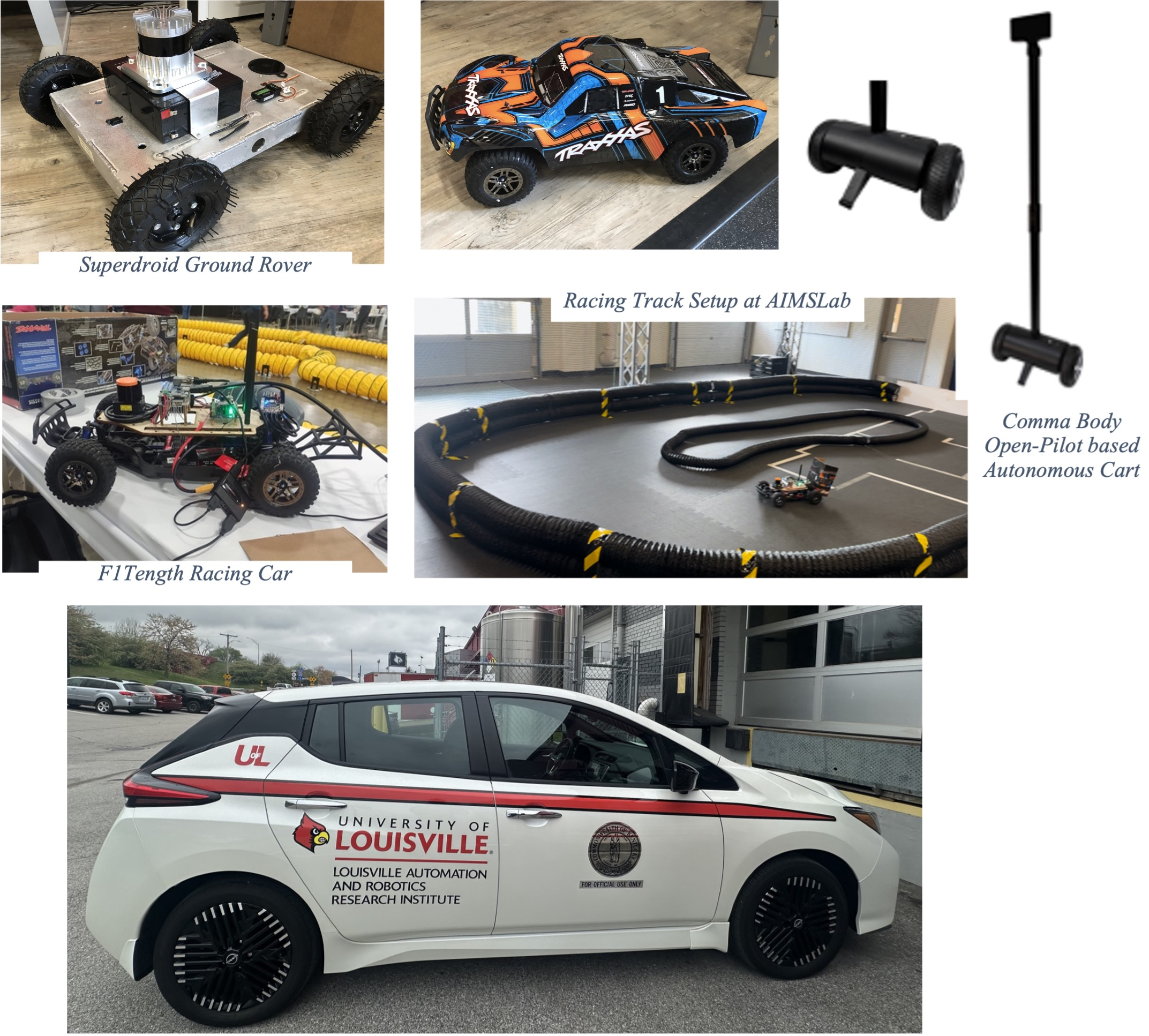

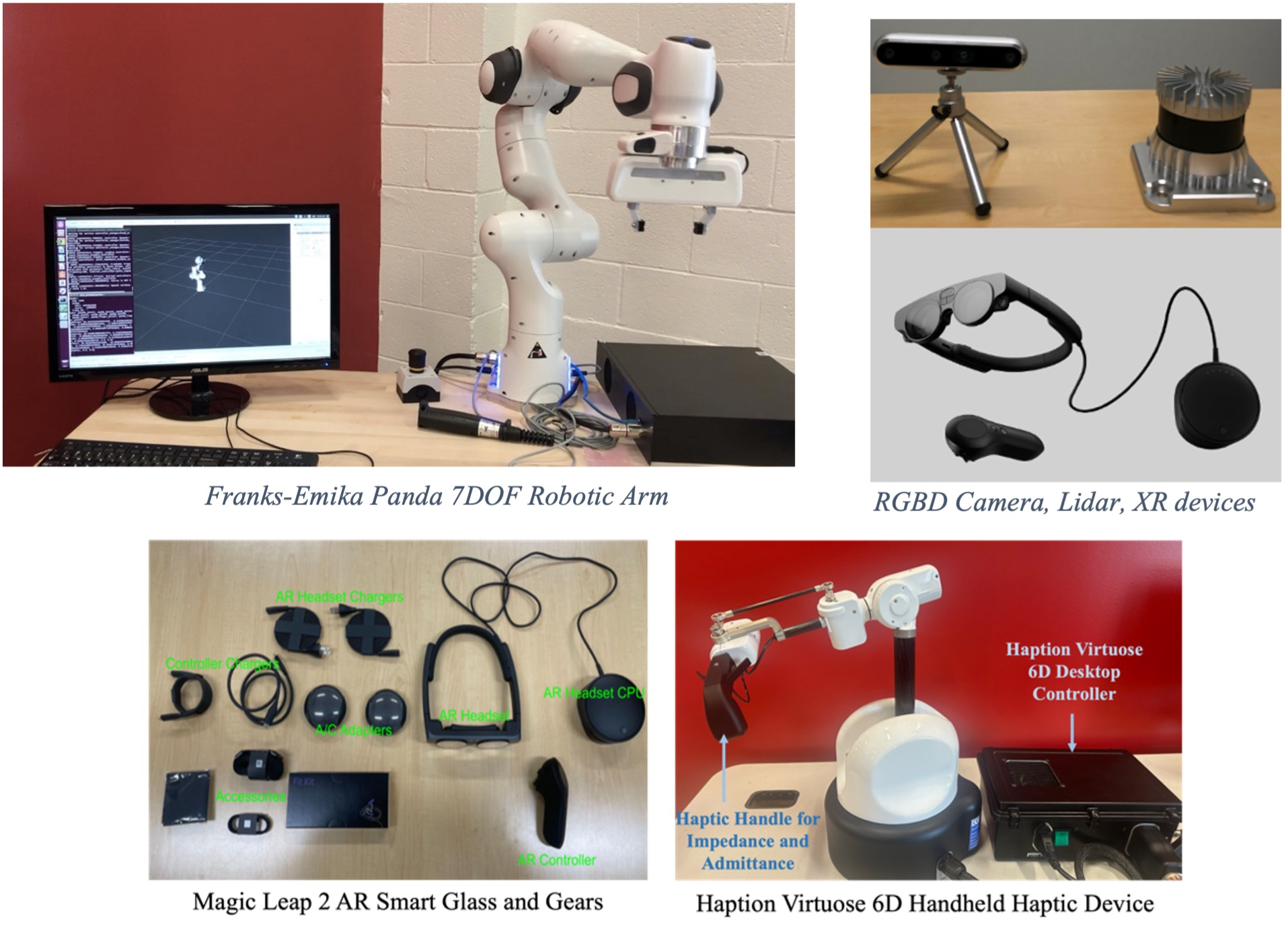

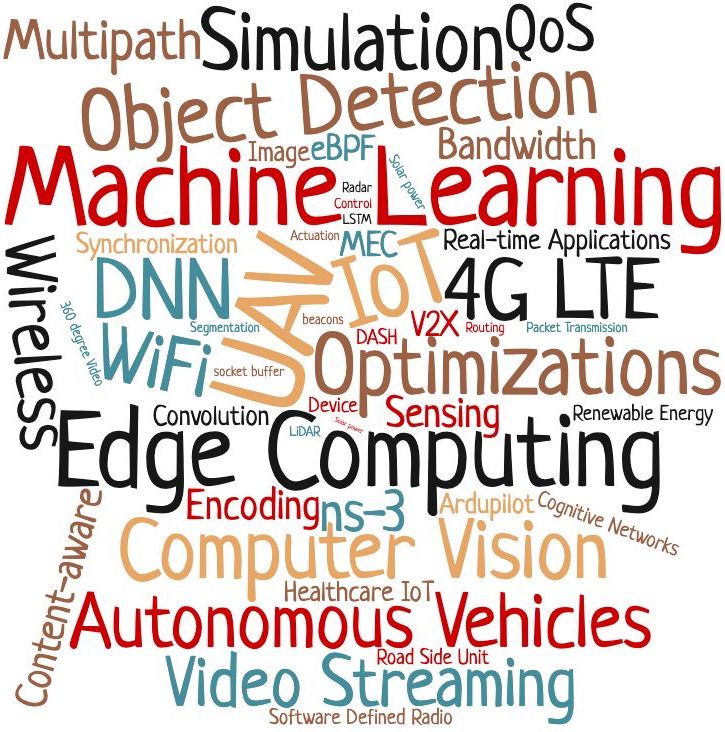

The Autonomous Intelligent Mobile Systems Laboratory (AIMSLab) was established in 2021 as one of the major labs at the Louisville Automation and Robotics Research Institute (LARRI) at the University of Louisville (UofL). Housed in LARRI, AIMSLab conducts advanced research on and development of autonomous and intelligent systems, including unmanned aerial vehicles, ground rovers, connected and autonomous vehicles, industrial robotic arms, metaverse applications, and wireless and mobile innovations. This work combines novel integration of sensing and intelligent decision-making in collaborative robotics, using AI and adaptive sensing, with exploration of multi-level edge and cloud computing capabilities. AIMSLab offers an open, inclusive, and collaborative environment and welcomes researchers from diverse backgrounds, cultures, and demographics. Its cutting-edge facility is equipped with many mobile robots, sensors, systems, and tools, and it connects with several other labs at LARRI, enabling close collaboration with other engineering departments and with the School of Medicine on healthcare-related research.

| Faculty/PI (Lab Director) | |
|---|---|
| Dr. Sabur Baidya | Assistant Professor, Computer Science & Engineering, University of Louisville; Email: sabur.baidya@louisville.edu; Research Area: Edge Computing, Robotics, Wireless Networks |

| Graduate Students | |
|---|---|
| Mohammad Helal Uddin | Ph.D. Student, CSE, UofL; Email: helal@example.com; Research Area: Neural Network Compression for Resource-Constrained Cyber-Physical Systems |
| Narges Golmohammadi | Ph.D. Student, CSE, UofL; Email: narges@example.com; Research Area: Intelligent and Optimized Next-Generation Wireless Communication in Robotics |
| Ashutosh Prakash | Ph.D. Student, ECE, UofL (co-advised); Email: ashutosh@example.com; Research Area: Haptic-Enabled, Digital Twin-Based Teleoperation of Robotic Arms |
| William Arnold | M.S. Student, CSE, UofL; Email: william@example.com; Research Area: Side-Channel and Systems Security in Industrial Robotics |
| Raju Garuda | M.S. Student, CSE, UofL; Email: raju@example.com; Research Area: Integrated Co-simulation of Robotics and Software-Defined Radios |
| Luke Rappa | M.S. Student, CSE, UofL; Email: luke@example.com; Research Area: Enhanced Anomaly Detection with Deep Learning for Smart Agriculture |
| Basar Kutukcu | Ph.D. Student, CSE, University of California San Diego (externally advised); Email: basar@example.com; Research Area: Hardware-Software Co-design and Optimization for Deep Learning on Embedded Systems |

| Undergraduate Students | |
|---|---|
| Liam Seymour | B.S. Student, Double Major (ECE and CSE), Western Kentucky University (REU Intern); Email: liam@example.com; Research Area: Large Language Models on Resource-Constrained Cyber-Physical Systems |
| Alwin Rajkumar | B.S. Student, CSE, UofL; Email: alwin@example.com; Research Area: Deep Multi-task Fusion for Constrained Robotic Systems (e.g., UAVs) |

| K-12 Students | |
|---|---|
| Saveer Jain | K-12 Student, duPont Manual High School; Email: saveer@example.com; Research Area: Robotic Security, Tactical Sensing |

| Alumni | |
|---|---|

Some past and present projects are listed on our Projects page.

| Current Research Projects | |

|---|---|

|

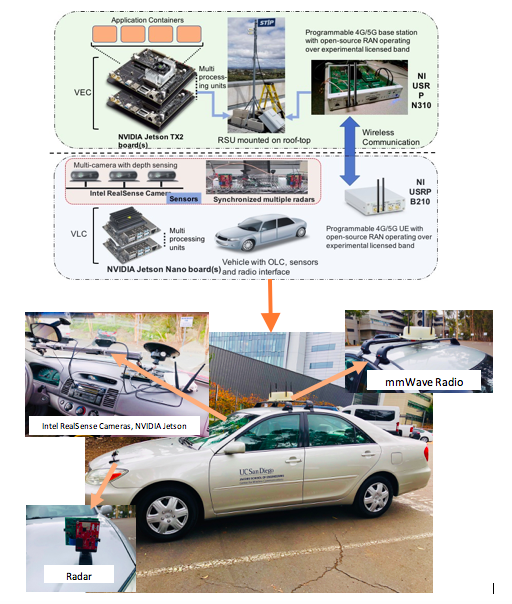

Collaborative Perception over Vehicular Edge Computing

Developing efficient task partitioning algorithms and real-time multi-sensor fusion for improved collaborative vision in smart transportation systems. Features a prototype testbed with smart vehicles and edge computing nodes. |

|

Design Space Exploration for ML Applications

Conducting design-space exploration for software-hardware co-design of application-driven adaptive computing systems, focusing on optimizing power, area, and speed while maintaining high accuracy and low latency. |

|

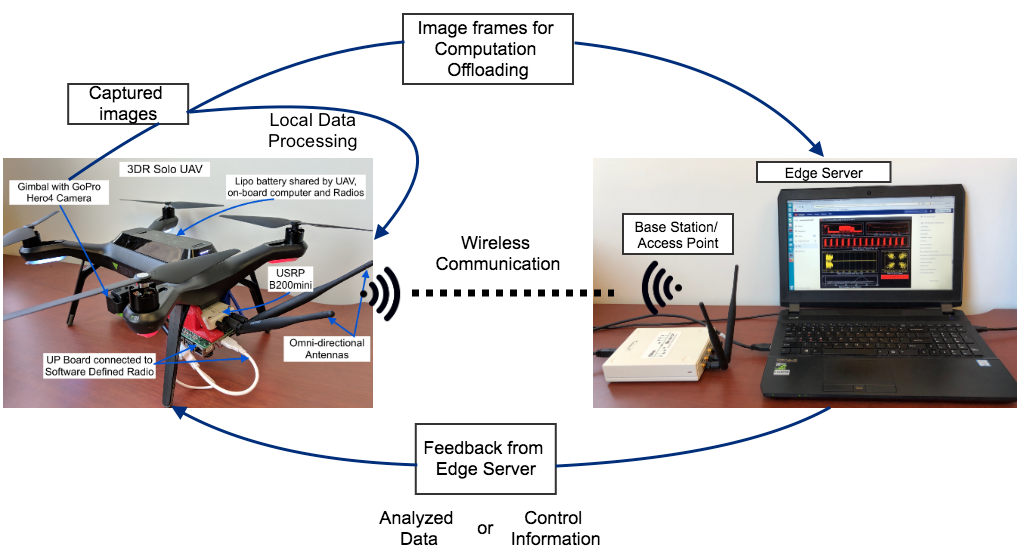

Resilient Computation for UAV Systems

Developing "Hydra", an architecture for flexible sensing-analysis-control pipelines over autonomous airborne systems, introducing the concept of information autonomy for edge-assisted UAV applications. |

|

Renewable Energy-driven Vehicular Edge Computing

Creating machine learning models to predict small cell operation time based on communication traffic, computing demand, and environmental factors, maximizing renewable energy utilization. |

|

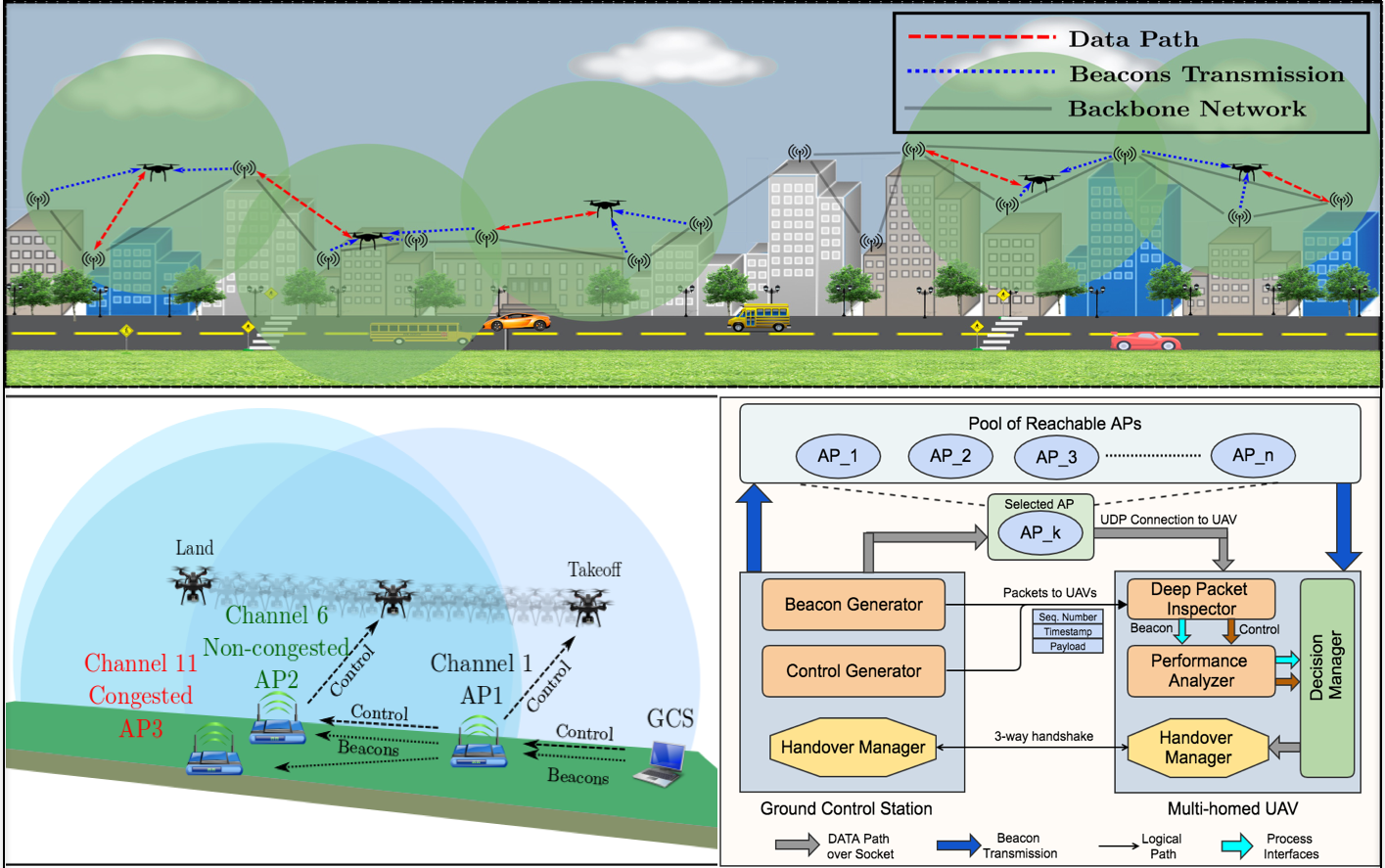

Robust UAV Communications

Designing a robust multi-path communication framework for UAV systems, featuring a fully open-sourced integrated UAV-network simulator called "FlyNestSim". |

|

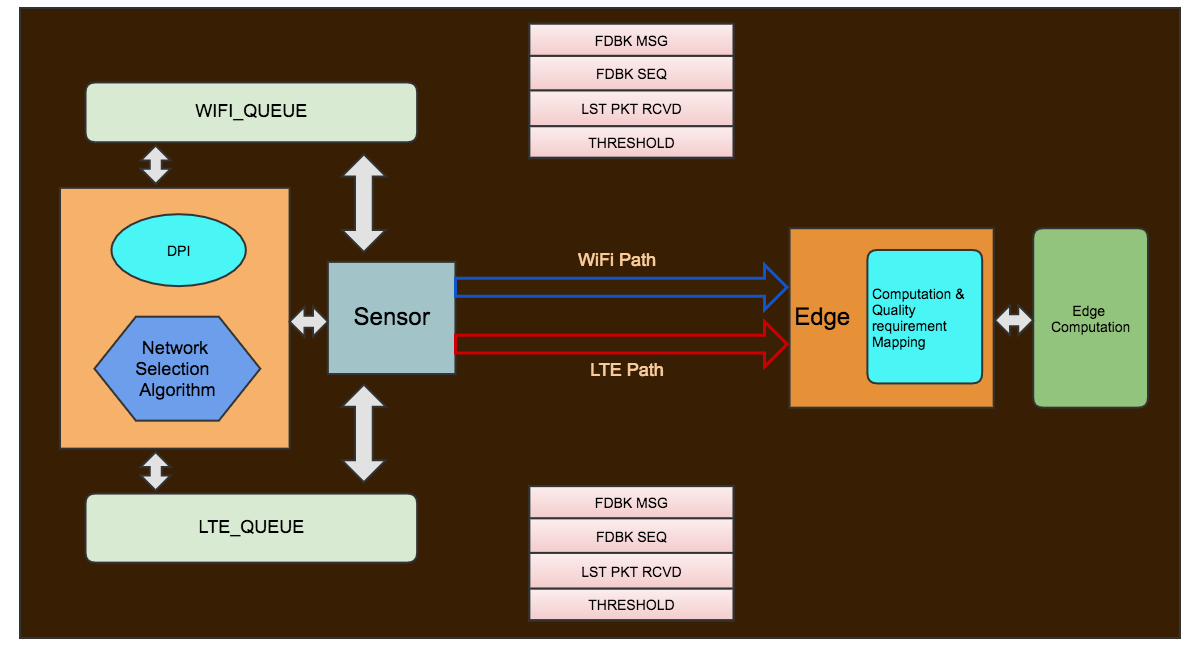

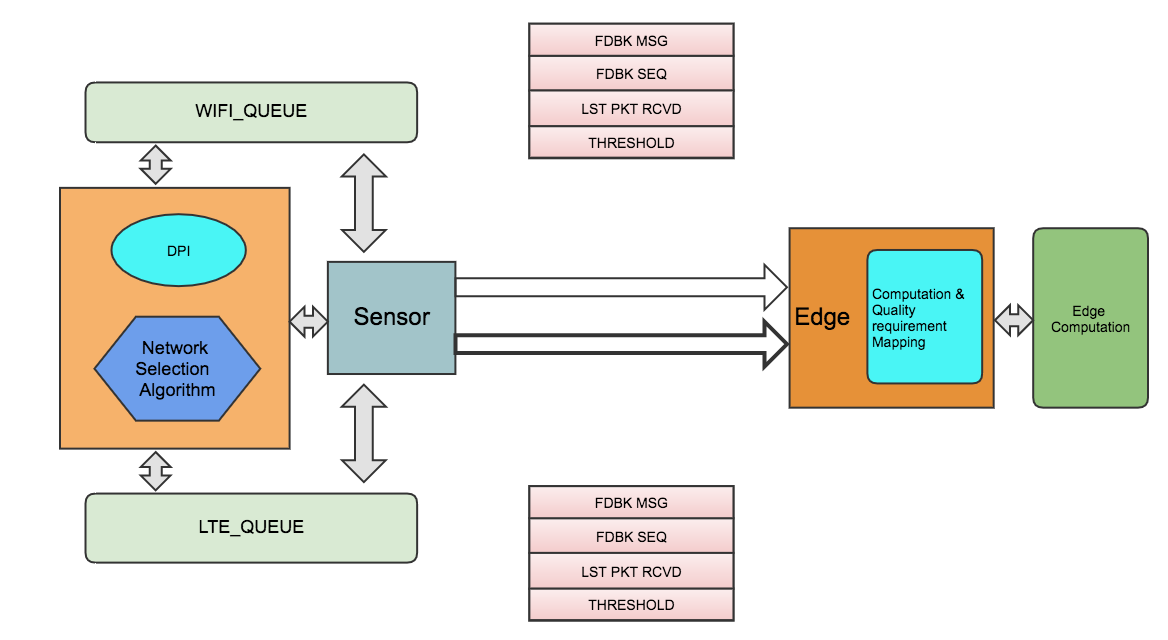

Software-Defined Edge Computing

Developing a content and computation-aware communication control framework based on SDN paradigm, using extended Berkeley Packet Filter (eBPF) for efficient multi-streaming. |

|

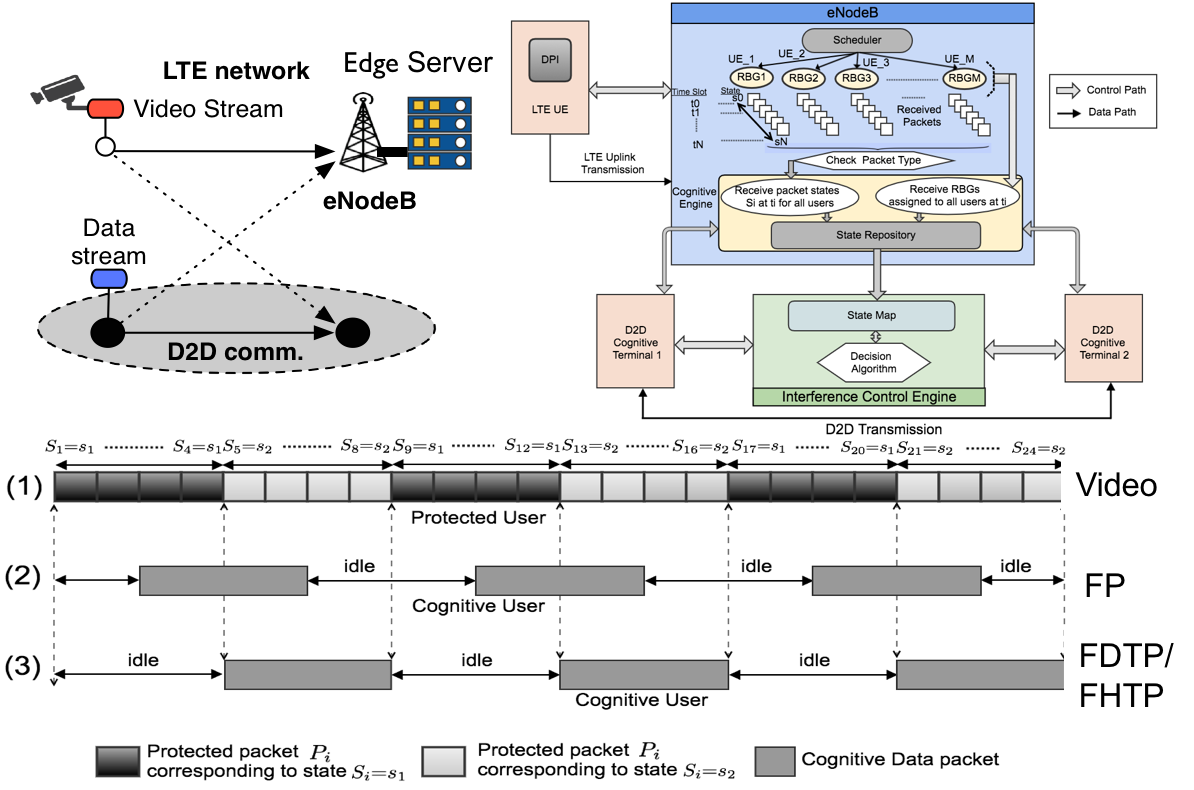

Cognitive Interference Control

Creating a cognitive interference control framework for heterogeneous local access networks in urban IoT systems, optimizing transmission patterns for improved throughput and accuracy. |

For a complete list of publications, please visit our publications page.

AIMSLab occupies a large high-bay space at LARRI that is optimized for indoor aerial robotic flight and ground vehicle control. An optical tracking system of 22 OptiTrack cameras enables high-precision tracking and high-frequency control loops, covering more than 12,000 cubic feet of test space with millimeter-precision reporting. The netted space is remotely controlled, with sliding panels that open to make room for other research, such as mixed-reality metaverse applications and experiments with ground robots. Position estimates, accurate to within 1 mm, can be published to a ROS topic with a new update every few milliseconds, and the end-to-end system latency has been measured at under 7.5 milliseconds. This permits testing of flight control algorithms in a controlled indoor setting without the setup and coordination that outdoor GPS-assisted flight requires. It also accelerates development of low-cost LiDAR-based imaging, because indoor-rated sensors can be used for evaluation. An automatic, retractable netting system that encloses every face of the cage keeps researchers safe during operation while allowing unimpeded access when aircraft are not in flight.